DeepSeek Model: Revolutionizing the Global Tech Landscape

DeepSeek has shaken up the AI landscape by releasing an open-source model that rivals, and on some benchmarks surpasses, industry-leading models at a fraction of the cost. Its flagship model, DeepSeek R1, is a reasoning AI system that excels at complex problem-solving tasks, including mathematics and coding. Remarkably, DeepSeek R1 achieves this level of performance with significantly fewer resources, approximately 2,000 NVIDIA H800 GPUs, making it about 96% more cost-effective than models like OpenAI's o1.

What Is DeepSeek?

DeepSeek is a Chinese AI startup known for developing advanced, open-source reasoning AI models. It aims to push the boundaries of AI research and development, with a mission to create highly capable and versatile AI systems that can perform a wide range of tasks at human-like or superhuman levels.

DeepSeek Model: Rapid AI Evolution from Transformers to Distilled Supermodels

DeepSeek R1 seems to have come out of nowhere, but an avalanche of earlier DeepSeek models brought us to this point.

DeepSeek v1

A 67-billion-parameter transformer model focused on optimizing feedforward neural networks. While traditional in design, it laid the groundwork for rapid iteration.

DeepSeek v2

A leap to 236 billion parameters that introduced two breakthroughs: multi-head latent attention (enhancing context processing) and a mixture-of-experts (MoE) architecture. This combination drastically improved speed and performance, setting the stage for larger models.

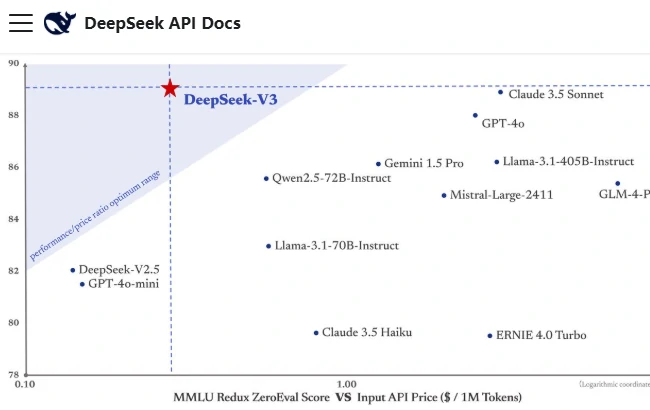

DeepSeek v3

Scaling to 671 billion parameters, v3 integrated reinforcement learning (RL) during training and optimized GPU load balancing on clusters of NVIDIA H800 GPUs. This marked DeepSeek's shift toward combining scale with adaptive learning strategies.

R1-Zero

The first "reasoning model" in the lineup, R1-Zero abandoned supervised fine-tuning entirely, relying exclusively on RL. By rewarding desirable outputs and penalizing errors, the model refined its problem-solving abilities dynamically.
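As a rough illustration of "rewarding desirable outputs and penalizing errors", the sketch below scores a single model completion with simple rules. The `<think>` tag format, the function name `rule_based_reward`, and the specific reward values are assumptions made for this example, not details taken from DeepSeek's training setup.

```python
import re

# Assumed output format (hypothetical): reasoning inside <think>...</think>,
# followed by the final answer.
THINK_PATTERN = re.compile(r"^<think>(.+?)</think>\s*(.+)$", re.DOTALL)


def rule_based_reward(completion: str, reference_answer: str) -> float:
    """Score one completion: reward a correct answer, penalize errors."""
    match = THINK_PATTERN.match(completion.strip())
    if match is None:
        return -1.0  # malformed output: largest penalty (illustrative value)
    final_answer = match.group(2).strip()
    if final_answer == reference_answer.strip():
        return 1.0  # well-formed and correct: rewarded
    return -0.5  # well-formed but wrong: smaller penalty (illustrative value)


print(rule_based_reward("<think>2 + 2 is 4</think> 4", "4"))
print(rule_based_reward("<think>just guessing</think> 5", "4"))
print(rule_based_reward("4", "4"))
```

Because the score depends only on simple checks of the output, no human-labeled reasoning traces are needed, which is the property the RL-only approach relies on.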

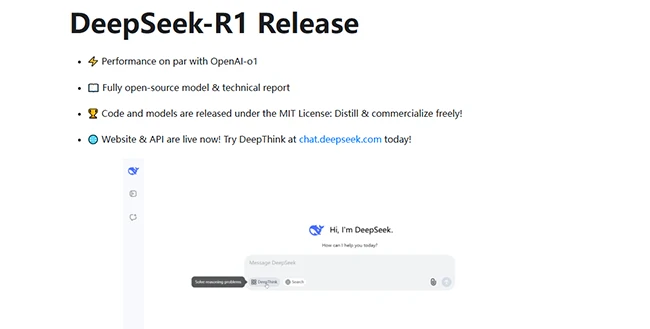

DeepSeek-R1

Building on R1-Zero, this hybrid model merged RL with supervised fine-tuning, achieving benchmark performance rivaling OpenAI's leading systems. Its versatility in tasks like coding and logic solidified the standing of DeepSeek's models.

How the DeepSeek Model's Groundbreaking Efficiency Works

Perhaps the biggest question for everyone is how DeepSeek operates at such a comparatively low cost.

DeepSeek achieves its remarkably low operational costs by utilizing a fraction of the highly specialized NVIDIA chips required by its American competitors to train AI models.

For instance, DeepSeek engineers have reported using only 2,000 GPUs to train their DeepSeek V3 model. By comparison, Meta's latest open-source model, Llama 4, reportedly requires over 100,000 GPUs. This efficiency is attributed to chain-of-thought reasoning and reinforcement learning, features introduced in DeepSeek V3.

DeepSeek Reinforcement Learning with Chain-of-Thought Reasoning

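Unlike traditional models that improve through supervised or unsupervised learning, DeepSeek models use reinforcement learning, which allows the model to discover the best problem-solving strategies by rewarding it for correct outputs, regardless of how it arrives at them. Chain-of-thought reasoning means the model writes out its intermediate steps before giving a final answer, so those strategies take the form of explicit reasoning.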
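To make the idea of outcome-based rewards concrete, here is a toy sketch in Python. It is not DeepSeek's training procedure: a basic REINFORCE-style update over three made-up strategies stands in for it, and the task, the strategy names, the learning rate, and the step count are all assumptions chosen for illustration. The reward looks only at whether the final answer is correct, so both correct strategies are reinforced even though they take different routes.

```python
import math
import random

# Hypothetical toy task: compute 6 * 7 using one of three strategies.
STRATEGIES = {
    "multiply": lambda: 6 * 7,             # correct
    "repeated_add": lambda: sum([6] * 7),  # correct, by a different route
    "add": lambda: 6 + 7,                  # wrong
}
TARGET = 42


def softmax(prefs):
    """Turn preference scores into a probability distribution."""
    peak = max(prefs)
    exps = [math.exp(p - peak) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def train(steps=2000, lr=0.1, seed=0):
    """Learn strategy preferences from outcome-only rewards."""
    rng = random.Random(seed)
    names = list(STRATEGIES)
    prefs = [0.0] * len(names)
    for _ in range(steps):
        probs = softmax(prefs)
        choice = rng.choices(range(len(names)), weights=probs)[0]
        # The reward depends only on the final answer, not on the route taken.
        reward = 1.0 if STRATEGIES[names[choice]]() == TARGET else -1.0
        # REINFORCE-style step: the gradient of log pi(choice) with respect
        # to preference i is (1 if i == choice else 0) - probs[i].
        for i in range(len(prefs)):
            indicator = 1.0 if i == choice else 0.0
            prefs[i] += lr * reward * (indicator - probs[i])
    return dict(zip(names, softmax(prefs)))


probs = train()
print(probs)
```

After training, nearly all of the probability mass sits on the two correct strategies, while the wrong one is chosen only rarely.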

Mixture of Experts (MoE) Architecture

DeepSeek R1 uses a Mixture of Experts (MoE) architecture, which divides the AI model into specialized sub-networks, or experts, and activates only the ones needed for a given task. This drastically reduces computational costs during training and speeds up inference.
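The routing idea can be sketched in a few lines of Python. This is a minimal toy, assuming scalar inputs, a linear router, and top-2 selection; the class name `TinyMoE`, the example experts, and the gate parameters are hypothetical and are not taken from DeepSeek's implementation.

```python
import math


def softmax(scores):
    """Turn router scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class TinyMoE:
    """Toy mixture-of-experts layer over scalar inputs (illustrative only)."""

    def __init__(self, experts, gate_params, top_k=2):
        self.experts = experts           # list of callables: float -> float
        self.gate_params = gate_params   # one (weight, bias) pair per expert
        self.top_k = top_k
        self.calls = [0] * len(experts)  # how often each expert actually ran

    def forward(self, x):
        # Router: score every expert cheaply, then keep only the top-k.
        scores = [w * x + b for w, b in self.gate_params]
        ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        active = ranked[: self.top_k]
        # Renormalize the gate weights over the selected experts only.
        gates = softmax([scores[i] for i in active])
        output = 0.0
        for gate, idx in zip(gates, active):
            self.calls[idx] += 1  # only selected experts are evaluated
            output += gate * self.experts[idx](x)
        return output, active


experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
gate_params = [(1.0, 0.0), (0.5, 0.0), (-1.0, 0.0), (0.0, 0.5)]
moe = TinyMoE(experts, gate_params, top_k=2)
output, active = moe.forward(3.0)
print(output, active, moe.calls)
```

With four experts and `top_k=2`, only half of the experts run for any given input, which is where the compute savings come from; the unselected experts contribute nothing and cost nothing for that input.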

Conclusion

By utilizing advanced techniques like reinforcement learning, chain-of-thought reasoning, and MoE architecture, the DeepSeek model competes with industry giants and does so with fewer resources. DeepSeek stands as a testament to the potential of open-source AI systems with its remarkable efficiency and powerful reasoning capabilities. As DeepSeek continues to evolve and push the boundaries of AI, it sets a new standard for performance, scalability, and affordability in the rapidly advancing world of artificial intelligence.